First, a bit of history.

Optimisation has been an important part of writing software since the beginning of computing. The earliest computers had extremely limited memory by modern standards, and programs were entered by flipping switches on a panel. Optimising for size was an absolute necessity at the time: the program had to be small enough to fit in the computer's memory to run at all, and a smaller program also took less time to enter.

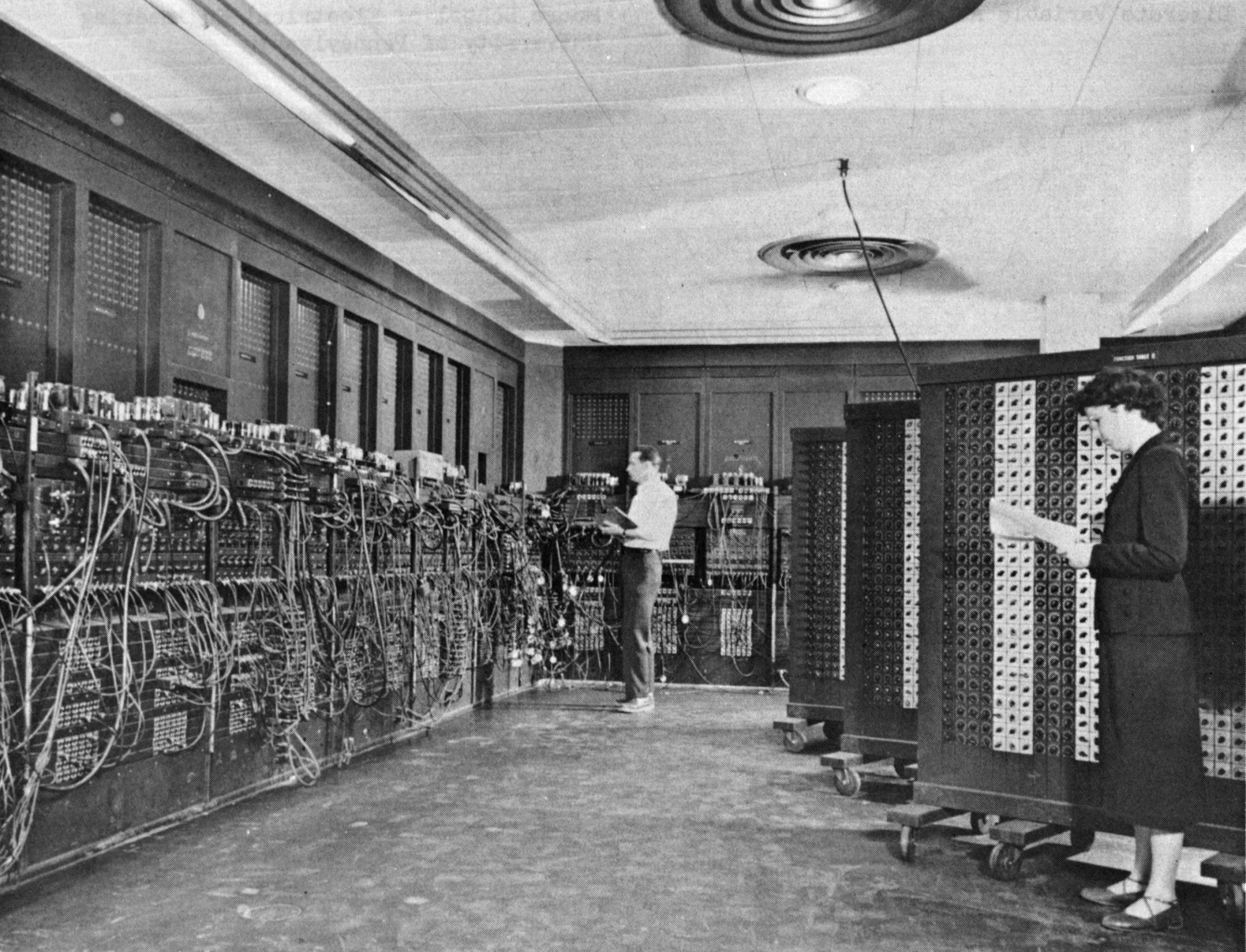

ENIAC computer – By Unknown author – U.S. Army Photo, Public Domain

Later, in the age of mainframe computers, computing time was charged by the minute and second, so a lot of effort went into making software run as quickly as possible to reduce the cost of using the shared mainframe.

In the 1980s, the first PCs entered the market. Optimising for both size and speed was still common, as hardware was quite expensive at the time and it was important for businesses to use it as efficiently as possible. Later models featured ever faster processors and ever more memory, so speed of development began to matter more than how efficiently the software ran. The end result can be seen in today’s desktop software. From 1982 to 2022, the performance of an average office computer increased 5,000 to 10,000 fold, but even disregarding keyboard input, does software feel 5,000 times faster today?

At about the same time as the first PCs, home computers such as the Commodore 64, Atari, Spectrum, BBC Micro, and others also became popular. A major type of software for these (some might say THE major type) was games, and this is mainly where optimisation lived on through the following years. It was important to game developers that their games could run on the hardware of the day, and there was great competition to make games look as spectacular as possible. Even now in the 2020s, games are some of the most intensely optimised software to be found.

Today

But is optimisation still necessary in today’s world of cloud computing where, for all intents and purposes, boundless computing resources are available at the click of a button?

While optimising for size is less useful in this context, optimising for performance is still highly relevant. Keep in mind that optimising for performance is also optimising for efficiency, as it lets you get the most out of your existing computing resources and committed cost.

There are situations where performance can be increased simply by throwing more hardware at the problem, and this is very easy and tempting to do in a cloud environment. However, the extra hardware carries an additional cost, which you incur every day and every month for as long as the hardware is deployed. Over time this can add up to a significant amount, making the total lifetime cost of your solution higher than if you had optimised instead (optimisation incurs an upfront cost but results in lower ongoing costs).
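The trade-off between a one-off optimisation cost and a recurring hardware cost can be sketched as a simple break-even calculation. The figures below are hypothetical and only there to illustrate the arithmetic. The function names are made up for this example.

```python
import math


def break_even_months(optimisation_cost: float, monthly_saving: float) -> int:
    """Whole months until a one-off optimisation cost is recovered
    through lower monthly running costs."""
    if monthly_saving <= 0:
        raise ValueError("optimisation must reduce the monthly cost")
    return math.ceil(optimisation_cost / monthly_saving)


def lifetime_cost(upfront: float, monthly: float, months: int) -> float:
    """Total cost of a solution over its lifetime."""
    return upfront + monthly * months


# Hypothetical figures, for illustration only.
EXTRA_HARDWARE_PER_MONTH = 400.0  # ongoing cost of adding hardware instead
OPTIMISATION_COST = 6000.0        # one-off engineering cost of optimising
LIFETIME_MONTHS = 36

months = break_even_months(OPTIMISATION_COST, EXTRA_HARDWARE_PER_MONTH)
hardware_total = lifetime_cost(0.0, EXTRA_HARDWARE_PER_MONTH, LIFETIME_MONTHS)
optimised_total = lifetime_cost(OPTIMISATION_COST, 0.0, LIFETIME_MONTHS)

print(f"Break-even after {months} months")
print(f"Extra hardware over {LIFETIME_MONTHS} months: {hardware_total:.0f}")
print(f"Optimisation over {LIFETIME_MONTHS} months: {optimised_total:.0f}")
```

With these assumed numbers, the optimisation pays for itself after 15 months, and every month after that is a saving. Real projects would also need to account for the maintenance cost of the optimised code.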

The two main outcomes of optimising your software are:

- Reducing the load on your service, thus letting you do more work without having to add more hardware.

- Reducing the time spent waiting for requests to your service to be processed and a response returned to the user or customer. This, in turn, leads to less frustration for the consumer of the service.

What kinds of software optimisations would typically be done?

Software code, whether written in C#, Java, JavaScript, or other languages, can be reworked to run more efficiently. Typical techniques include carefully planning data structures to improve compactness and memory access patterns, taking advantage of parallel data processing instructions and multiple processors, and reorganising the code to reduce the number of calls to subroutines, among many others. Entire books have been written on the subject.
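As one small illustration of planning data structures, the sketch below counts how many incoming request IDs belong to a set of known IDs. Both functions are hypothetical examples, not taken from any particular codebase. The first scans a list for every request, while the second builds a hash set once so that each lookup takes roughly constant time.

```python
def count_known_slow(requests: list, known_ids: list) -> int:
    """Checks membership in a list: every lookup scans known_ids."""
    return sum(1 for request_id in requests if request_id in known_ids)


def count_known_fast(requests: list, known_ids: list) -> int:
    """Builds a set once, then does hash lookups for each request."""
    known = set(known_ids)
    return sum(1 for request_id in requests if request_id in known)


# Hypothetical sample data.
known_ids = [f"user-{n}" for n in range(0, 10_000, 2)]   # even numbers only
requests = [f"user-{n}" for n in range(0, 1_000)]

# Both versions give the same answer; only the amount of work differs.
assert count_known_slow(requests, known_ids) == count_known_fast(requests, known_ids)
print(count_known_fast(requests, known_ids))
```

The result is identical in both cases. The difference only shows up in running time as the lists grow, which is why it is worth measuring before and after a change like this.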

Database queries can be optimised by making sure the queries only retrieve the specific data that is required, applying sorting only if necessary, and by adding or restructuring indices on the relevant tables to support the most frequent queries. The order of fields in each index is also highly important. As database queries tend to be processed by a different service from the one that runs your code, every query carries a round-trip overhead, so there is also an optimisation opportunity in making each query do more and issuing fewer queries as a result.
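The points about retrieving only the required columns, indexing for frequent lookups, and using fewer queries can be sketched with Python's built-in `sqlite3` module. The schema, table names, and data are invented for this example, and an in-memory SQLite database stands in for what would normally be a separate database server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, notes TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    -- Index supporting the most frequent lookup: orders by customer.
    CREATE INDEX idx_orders_customer ON orders (customer_id);
""")
conn.executemany(
    "INSERT INTO customers (id, name, notes) VALUES (?, ?, ?)",
    [(1, "Ada", "..."), (2, "Grace", "..."), (3, "Linus", "...")],
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(1, 10.0), (1, 5.0), (2, 7.5)],
)


def totals_many_queries(conn):
    """One query per customer: a round trip for every row."""
    result = {}
    customers = conn.execute("SELECT id, name FROM customers").fetchall()
    for customer_id, name in customers:
        row = conn.execute(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer_id = ?",
            (customer_id,),
        ).fetchone()
        result[name] = row[0]
    return result


def totals_single_query(conn):
    """A single query that lets the database do the join and the sum."""
    rows = conn.execute("""
        SELECT c.name, COALESCE(SUM(o.total), 0)
        FROM customers AS c
        LEFT JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
    """)
    return dict(rows)


# Same result, but one round trip instead of one per customer.
assert totals_many_queries(conn) == totals_single_query(conn)
print(totals_single_query(conn))
```

Neither version selects the `notes` column, since it is not needed for the result. With three customers the difference is negligible, but with thousands of customers and a database on another machine, the per-query overhead of the first version adds up quickly.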

Depending on your software, it may even be possible to avoid using traditional relational databases altogether. Modern alternatives, generally known as NoSQL databases, can be significantly faster than a relational database for a number of (rather simple) queries.

Should you still be optimising for size?

With all of this, is there any situation where optimising for size is still relevant?

Certainly, but these days it is more of a niche requirement, mainly when programming microcontrollers. Microcontrollers are processors that contain built-in input, output, and timing circuits, and are built into devices rather than computers. They come in many sizes and performance levels, but towards the low end are devices like the ATtiny series (a very suitable name), which includes models with just 2 KB of program memory and 128 bytes of RAM. Writing code for them takes some special care and consideration!